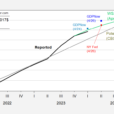

The Big Data problem is accelerating as companies get better at collecting and storing information that might bring business value through insight or improved customer experiences. A small specialist group of analysts used to be responsible for extracting that insight, but this is no longer the case. We are standing at a nexus between Big Data and the demands of thousands of users – something that we call “global scale analytics” at MicroStrategy. The old architectural approaches are no longer up to the task, and this new problem needs radical new technology. If companies continue with the old approach, Big Data will fail to reach its true potential and simply become a big problem for them.

Analytics applications now regularly serve thousands of employees, each of whom may need access to hundreds of visualisations, reports and dashboards to do their job. The application must be ready for a query at any time and from any location, and the results must be served with ‘Google-like’ response times: users’ experience of the web is the benchmark by which they judge application responses in the work environment.

With this huge rise in data and user demands, the traditional technology stack simply can’t cope: it is becoming too slow and too expensive to build and maintain an analytics application environment. Sure, there are some great point solutions, but the problem is the integration between the parts of the stack – the stack only performs as well as its weakest link.

The industry has only been working to solve half the problem, data collection and storage, rather than looking at the full picture, which also includes analytics and visualisation. Loosely coupled stacks scale poorly and impose a huge management and resource overhead on IT departments, making them uneconomical and slow to adapt.

Solving the end-to-end Big Data analytics problem requires an architecture that tightly integrates each level of the analytics stack and takes advantage of the commoditisation of computing hardware, so that analytics can scale with near-perfect linearity and economies of scale while delivering sub-second response times on multi-terabyte datasets.
